Two weeks ago, an intriguing leak of Google's Contentwarehouse API documentation exposed 2,596 different API modules used by Google.

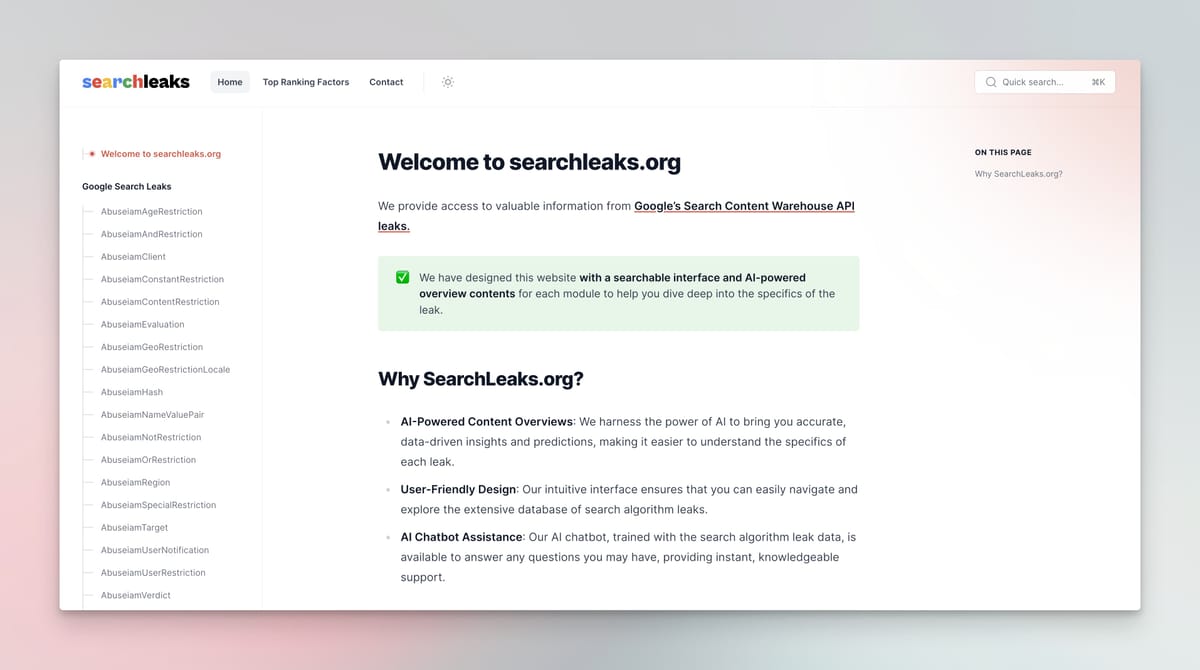

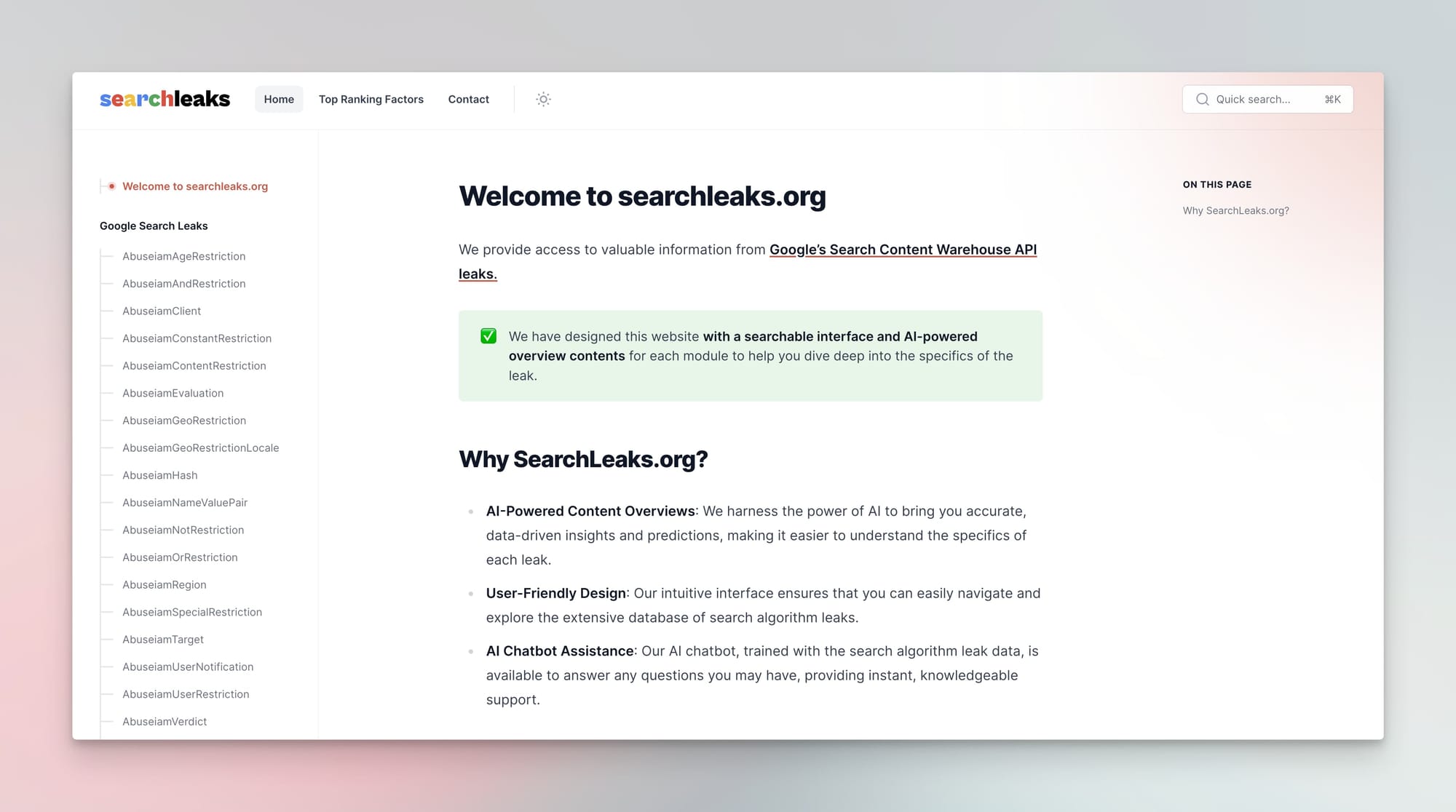

Many insightful analyses have been published since the leak, but I saw an opportunity to create a directory website where these documents could be examined easily, with a user-friendly interface and AI-powered overviews. So I built searchleaks.org.

Searchleaks.org offers detailed information about each API module, including how each module influences ranking factors and other parameters, with the AI analysis generated by GPT-4.

In my previous blog post, I summarized the key ranking factors from the leaked documents.

In this blog post, I’ll walk you through how I launched this website in just 4 hours using no-code tools.

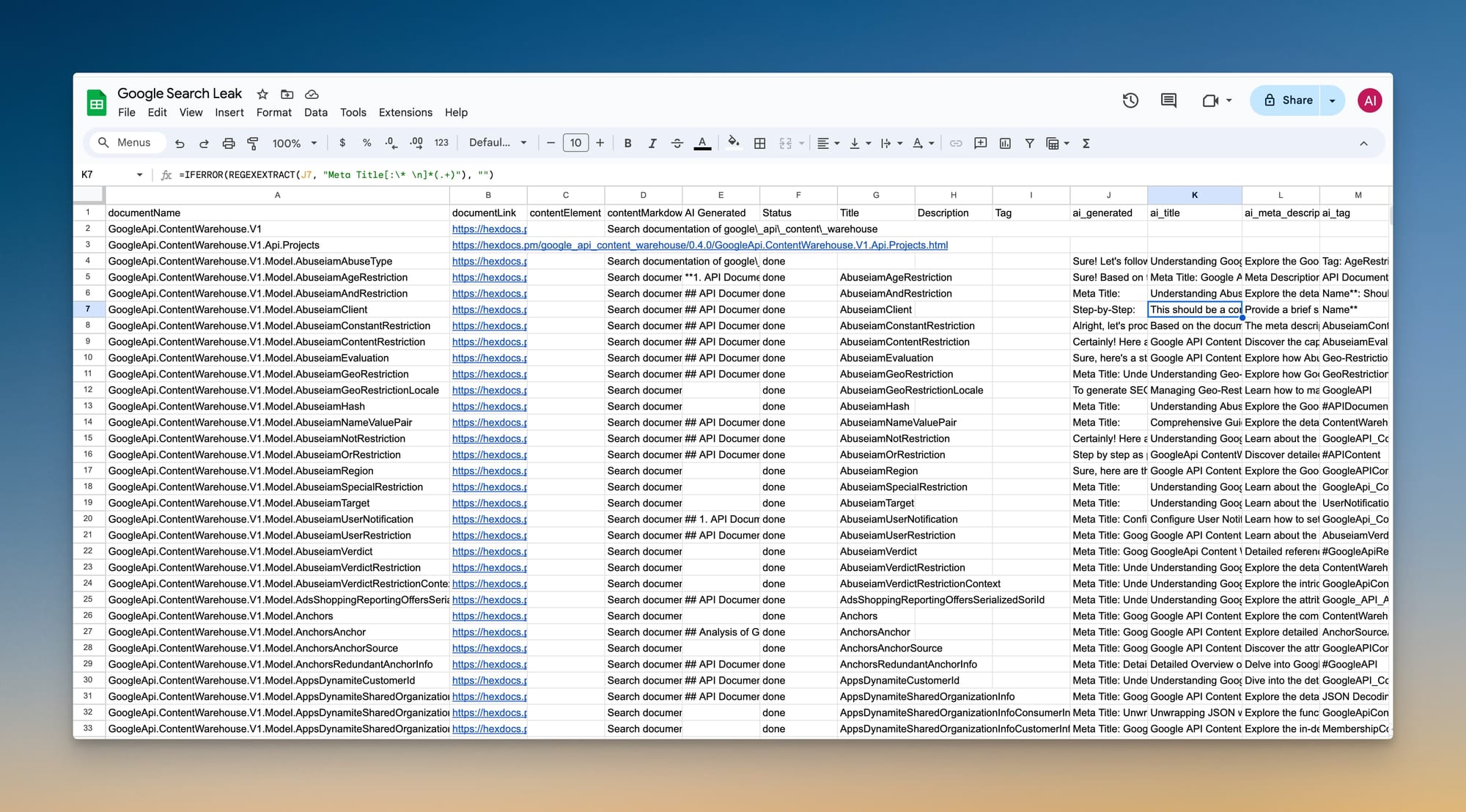

Step 1: Scraping the Google Contentwarehouse documents

The first step involved scraping the leaked Google Contentwarehouse documents. I saved all the extracted data into a Google Sheet for easy access and manipulation.
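The post doesn't say how the scraping itself was done. As one possible approach, here is a minimal sketch using only Python's standard library: it assumes each module has its own HTML page whose `<h1>` holds the module name (an assumption about the markup), keeps the visible text, and writes rows as CSV, which Google Sheets can import. The names `ModuleDocParser`, `parse_module_page`, and `rows_to_csv` are hypothetical helpers for illustration.

```python
import csv
import io
from html.parser import HTMLParser


class ModuleDocParser(HTMLParser):
    """Collects the module name (assumed to be the page's <h1>) and the
    visible text of one module documentation page."""

    def __init__(self):
        super().__init__()
        self.title_parts = []
        self.text_parts = []
        self._in_h1 = False
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "h1":
            self._in_h1 = True

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        text = data.strip()
        if self._skip_depth or not text:
            return  # ignore scripts/styles and whitespace-only text nodes
        if self._in_h1:
            self.title_parts.append(text)
        self.text_parts.append(text)


def parse_module_page(html: str) -> dict:
    """Turn one page of HTML into a spreadsheet-ready row."""
    parser = ModuleDocParser()
    parser.feed(html)
    parser.close()
    return {
        "module": " ".join(parser.title_parts),
        "content": "\n".join(parser.text_parts),
    }


def rows_to_csv(rows: list) -> str:
    """Serialize rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["module", "content"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

Usage: call `parse_module_page` on each downloaded page, then pass the list of rows to `rows_to_csv` and import the result into a sheet. Multi-line content is quoted by the `csv` module, so each module stays in a single row.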

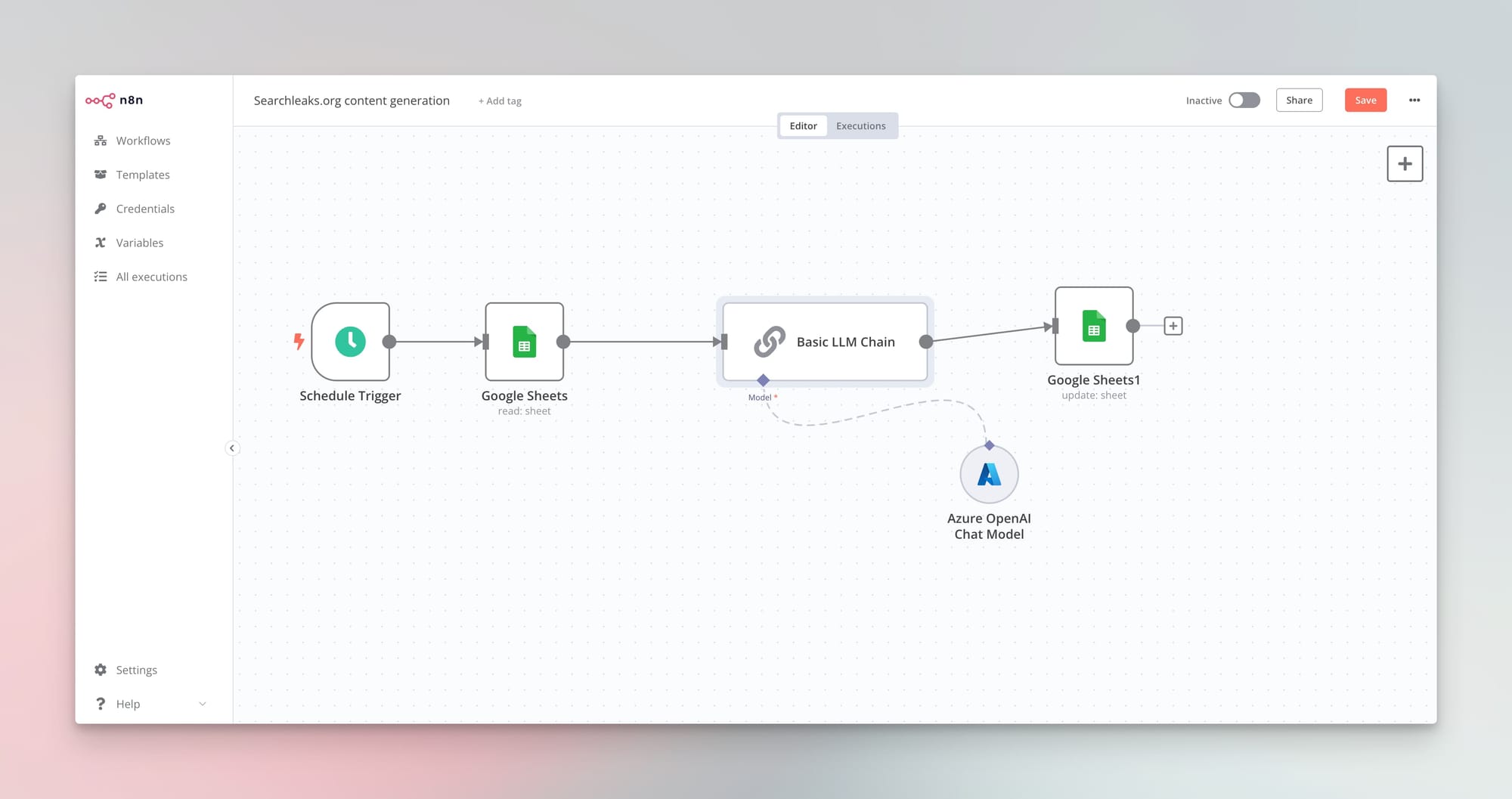

Step 2: Analyzing the documents and creating AI-powered overviews

Next, I used the no-code automation tool n8n to send every record from the Google Sheet to GPT-4o, which analyzed the content of each document using the following prompt:

Act as an SEO Expert

I will provide an API document that impacts on-page Google's ranking algorithms. Your task is to generate a comprehensive analysis, covering the purpose, influence on SEO ranking factors, and important considerations when using it. Additionally, include the original attributes from the provided document in your analysis.

Please provide an in-depth analysis of this API document, addressing the following points:

1. API Document Name:

2. Purpose of the Document:

3. How This Document Influences Google's Ranking Factors:

4. Key Considerations When Using This Document:

5. Original Attributes and important from the Document in proper markdown format:

The AI-generated analyses were then recorded back into the Google Sheet alongside the original document content.
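In this build, n8n handled the API call. For readers who want to see what such a call looks like outside n8n, here is a minimal sketch of the equivalent HTTP request to OpenAI's Chat Completions endpoint for a single sheet row, using the prompt above as one user message. Sending the whole prompt as a single user message, and the helper names `build_payload`, `build_request`, `extract_analysis`, and `analyze`, are assumptions for illustration, not a description of n8n's internals.

```python
import json
import urllib.request

# OpenAI Chat Completions endpoint.
OPENAI_URL = "https://api.openai.com/v1/chat/completions"

# The prompt from this post, joined into one template string.
PROMPT = (
    "Act as an SEO Expert\n\n"
    "I will provide an API document that impacts on-page Google's ranking "
    "algorithms. Your task is to generate a comprehensive analysis, covering "
    "the purpose, influence on SEO ranking factors, and important "
    "considerations when using it. Additionally, include the original "
    "attributes from the provided document in your analysis.\n\n"
    "Please provide an in-depth analysis of this API document, addressing "
    "the following points:\n"
    "1. API Document Name:\n"
    "2. Purpose of the Document:\n"
    "3. How This Document Influences Google's Ranking Factors:\n"
    "4. Key Considerations When Using This Document:\n"
    "5. Original Attributes and important from the Document in proper "
    "markdown format:"
)


def build_payload(module_name, document_text, model="gpt-4o"):
    """Chat Completions request body for one spreadsheet row."""
    content = f"{PROMPT}\n\nAPI document: {module_name}\n\n{document_text}"
    return {"model": model, "messages": [{"role": "user", "content": content}]}


def build_request(payload, api_key):
    """Wrap the payload in an authenticated POST request (not yet sent)."""
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_analysis(response_body):
    """Pull the generated markdown out of a Chat Completions response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def analyze(module_name, document_text, api_key):
    """Send one document to the API and return the analysis text."""
    request = build_request(build_payload(module_name, document_text), api_key)
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_analysis(response.read().decode("utf-8"))
```

Running `analyze` once per row and writing the returned markdown into a new column mirrors the "record back into the sheet" step; with 2,596 modules, some delay or retry handling between calls would likely be needed to stay within API rate limits.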

Step 3: Setting up a new website with Ghost

For the website, I chose Ghost CMS, my favorite CMS, because of its simplicity, clean design, and ease of use. Setting up a self-hosted instance of the open-source Ghost took approximately 5 minutes, and the site runs on a $4 Hetzner cloud server.

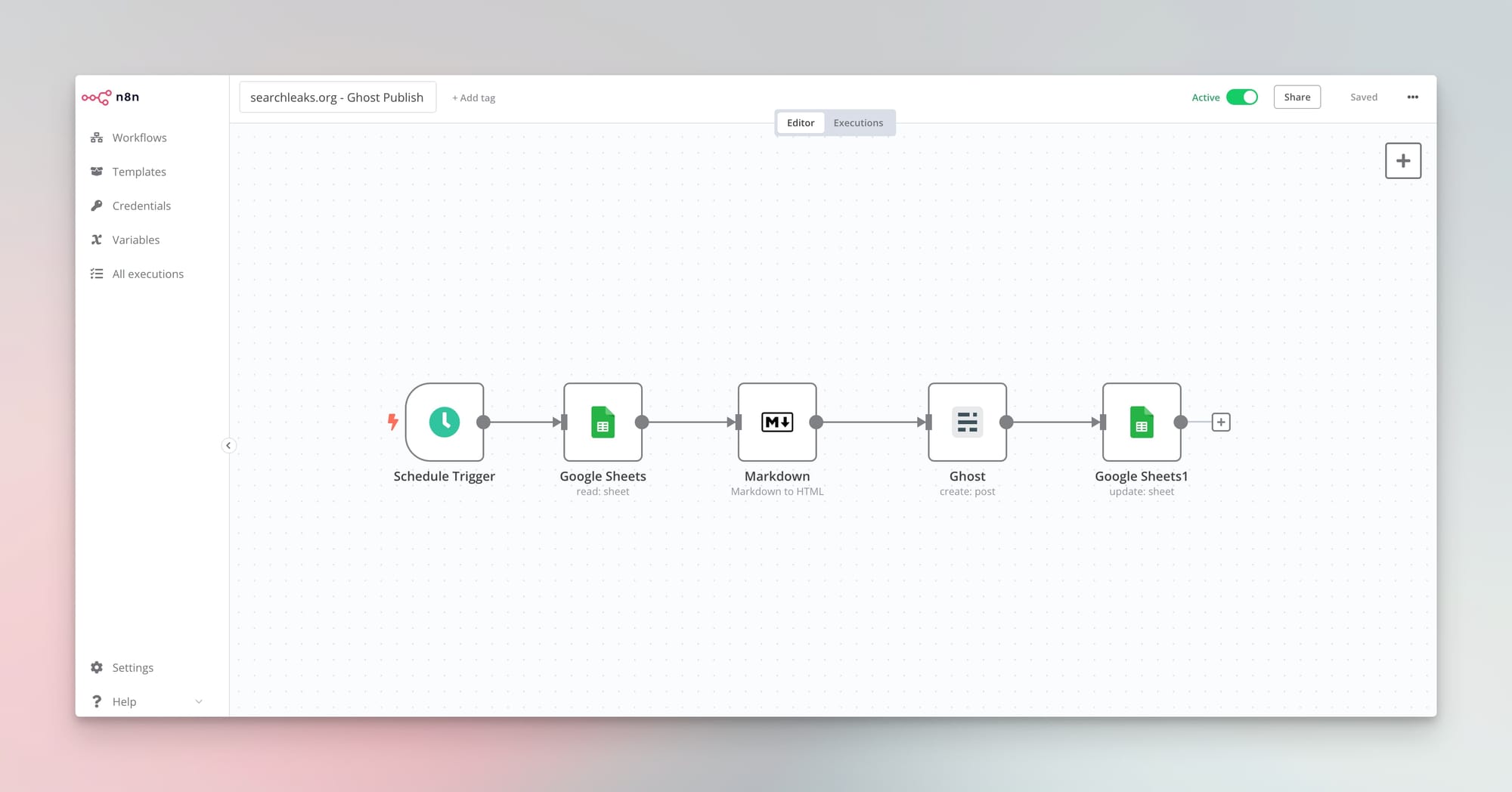

Step 4: Automating content entry with n8n

Using n8n, I then published all the content to Ghost CMS, so each document and its AI-generated analysis were uploaded systematically without manual intervention.
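Under the hood, automated publishing to Ghost goes through its Admin API. Here is a minimal sketch of one such request, assuming an Admin API key from a Ghost custom integration (format `id:secret`) and that the markdown analysis has already been converted to HTML. It builds the short-lived HS256 JWT that Ghost expects (`kid` header, `aud` of `/admin/`, expiry within 5 minutes) and a `POST` to the posts endpoint with `?source=html`. The helper names and the example site URL are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
import urllib.request
from typing import Optional


def _b64url(data: bytes) -> str:
    """Base64url encoding without padding, as used in JWTs."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def ghost_admin_token(admin_api_key: str, now: Optional[int] = None) -> str:
    """Build a short-lived HS256 JWT from a Ghost Admin API key (id:secret)."""
    key_id, secret_hex = admin_api_key.split(":")
    iat = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT", "kid": key_id}
    payload = {"iat": iat, "exp": iat + 5 * 60, "aud": "/admin/"}
    compact = lambda obj: _b64url(json.dumps(obj, separators=(",", ":")).encode())
    signing_input = f"{compact(header)}.{compact(payload)}"
    # The secret half of the key is hex-encoded; sign with its raw bytes.
    signature = hmac.new(
        bytes.fromhex(secret_hex), signing_input.encode("ascii"), hashlib.sha256
    ).digest()
    return f"{signing_input}.{_b64url(signature)}"


def build_post_request(site_url, admin_api_key, title, html, status="published"):
    """Prepare (but do not send) a request that creates one Ghost post."""
    url = site_url.rstrip("/") + "/ghost/api/admin/posts/?source=html"
    body = {"posts": [{"title": title, "html": html, "status": status}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Ghost {ghost_admin_token(admin_api_key)}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Usage: for each sheet row, pass the module name as `title` and the rendered analysis as `html`, then send the request with `urllib.request.urlopen`. Generating a fresh token per request keeps every call inside Ghost's 5-minute expiry window.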

After uploading the content, I finalized the site by installing a suitable theme and adding essential pages such as the privacy policy and about page.

Summary

I combined my favorite CMS, Ghost, with n8n for automation and GPT-4 for AI interpretation of documents. This streamlined process allowed me to build a comprehensive, user-friendly directory of Google’s search leak modules in just 4 hours.

I hope searchleaks.org proves to be a useful resource for anyone looking to understand the intricacies of Google’s search algorithms and the impact of various API modules on search rankings.

Feel free to explore the site and share your feedback!